
In this course, we are going to learn about polling, HTTP/2 push, WebSockets, and Socket.IO. When we talk about real-time, we mean that there is some server state and some client state, and we want the two to stay the same. A chat client, which is what we are building today, is a good example: people send messages back and forth, and when I send a message, I want everyone to see it. My client sends the message to the server, and the server then has to get it to every other client. To follow along, you should understand JavaScript and Node.js.

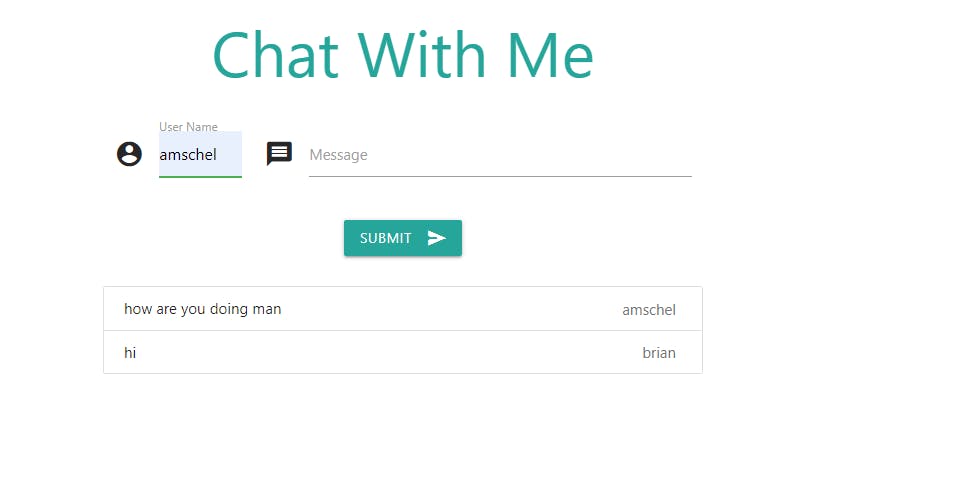

POLLING

HTTP polling is a technique in which the client keeps requesting a resource at regular intervals. If the resource is available, the server sends it in the response; if it is not, the server returns an empty response. Nothing special is needed on the backend: the setup is the same as for a normal Express server.
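The tutorial's /poll route always returns the whole message buffer, so in this app every response carries data. A common refinement, sketched here as an assumption rather than something the repo does, is for the client to send the timestamp of the newest message it already has (for example as a hypothetical `since` query parameter) and for the server to return only newer messages. That makes the "empty response" case explicit; `messagesSince` is an illustrative name.

```javascript
// Hypothetical helper, not part of the tutorial's repo.
// Each stored message carries a `time` (ms since epoch), as in the backend.
// Returns only messages newer than `since`; an empty array means "nothing new yet".
function messagesSince(messages, since) {
  return messages.filter((m) => m.time > since);
}

const stored = [
  { user: "brian", text: "hi", time: 1000 },
  { user: "alice", text: "hello", time: 2000 },
];

console.log(messagesSince(stored, 1000).length); // 1
console.log(messagesSince(stored, 2000).length); // 0
```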

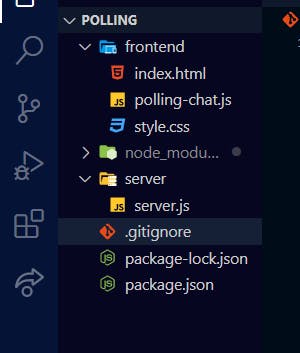

FOLDER STRUCTURE

To follow along, clone the repository, install the dependencies and start the server:

```bash
git clone https://github.com/amschel99/polling.git
cd polling
npm install
npm start
```

Once the server is running, the chat client is served at http://localhost:3000.

BACKEND OVERVIEW

The backend is a typical Express server:

```javascript
import express from "express";
import bodyParser from "body-parser";
import nanobuffer from "nanobuffer";
import morgan from "morgan";

// set up a limited array that keeps only the 50 most recent messages
const msg = new nanobuffer(50);
const getMsgs = () => Array.from(msg).reverse();

// feel free to take out, this just seeds the server with at least one message
msg.push({
  user: "brian",
  text: "hi",
  time: Date.now(),
});

// get express ready to run
const app = express();
app.use(morgan("dev")); // log every request
app.use(bodyParser.json()); // parse JSON request bodies into req.body
app.use(express.static("frontend")); // serve the static files in ./frontend

// GET /poll: return every buffered message
app.get("/poll", function (req, res) {
  res.json({ msg: getMsgs() });
});

// POST /poll: store a new message with a server-side timestamp
app.post("/poll", function (req, res) {
  const { user, text } = req.body;
  msg.push({
    user,
    text,
    time: Date.now(),
  });
  res.json({
    status: "OK",
  });
});

// start the server
const port = process.env.PORT || 3000;
app.listen(port);
console.log(`listening on http://localhost:${port}`);
```

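We use the nanobuffer package to create a limited array: `new nanobuffer(50)` holds at most 50 messages, and once it is full, each new push drops the oldest one.

getMsgs() copies the buffer into a plain array and reverses nanobuffer's iteration order before the messages are sent to the client.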
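To show what a "limited array" means, here is a minimal fixed-capacity ring buffer. It is an illustrative sketch, not nanobuffer's source code: `BoundedBuffer` is a hypothetical name, and its toArray() returns items oldest first, which may differ from the order nanobuffer iterates in.

```javascript
// A minimal fixed-capacity ring buffer (illustrative sketch, not nanobuffer).
// Once full, each push overwrites the oldest item.
class BoundedBuffer {
  constructor(capacity) {
    this.capacity = capacity;
    this.items = new Array(capacity);
    this.start = 0; // index of the oldest item
    this.length = 0; // number of items currently stored
  }

  push(item) {
    if (this.length < this.capacity) {
      // still room: write into the next free slot
      this.items[(this.start + this.length) % this.capacity] = item;
      this.length++;
    } else {
      // full: overwrite the oldest slot and advance the start pointer
      this.items[this.start] = item;
      this.start = (this.start + 1) % this.capacity;
    }
  }

  // returns the stored items from oldest to newest
  toArray() {
    const out = [];
    for (let i = 0; i < this.length; i++) {
      out.push(this.items[(this.start + i) % this.capacity]);
    }
    return out;
  }
}

const buf = new BoundedBuffer(3);
for (const n of [1, 2, 3, 4, 5]) buf.push(n);
console.log(buf.toArray()); // [ 3, 4, 5 ]
```

Memory stays constant no matter how many messages are posted, which is why a bounded buffer suits a demo server that never deletes anything.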

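When the client makes a GET request to the /poll endpoint, the server responds with every message currently in the msg buffer.

When the client makes a POST request to /poll, the server reads user and text from req.body, pushes a new message with those two fields and a Date.now() timestamp into msg, and replies with `{ status: 'OK' }`.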
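The POST handler stores whatever arrives in req.body, even if user or text is missing. A hypothetical hardening, not part of the repo, is to validate the body before pushing it; `toMessage` is an illustrative name, and the commented lines show how a handler could use it with Express's res.status().

```javascript
// Hypothetical helper: build the stored message from a POST body, or return
// null when `user` or `text` is missing or not a non-empty string.
function toMessage(body, now = Date.now()) {
  const { user, text } = body ?? {};
  const isNonEmptyString = (v) => typeof v === "string" && v.trim() !== "";
  if (!isNonEmptyString(user) || !isNonEmptyString(text)) return null;
  return { user, text, time: now };
}

// Possible use inside app.post("/poll", ...):
//   const message = toMessage(req.body);
//   if (!message) return res.status(400).json({ status: "invalid message" });
//   msg.push(message);
//   res.json({ status: "OK" });

console.log(toMessage({ user: "brian", text: "hi" }, 1000)); // { user: 'brian', text: 'hi', time: 1000 }
console.log(toMessage({ user: "brian" })); // null
```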

THE CLIENT

```javascript
const chat = document.getElementById("chat");
const msgs = document.getElementById("msgs");

// let's store all current messages here
let allChat = [];

// the interval to poll at in milliseconds
const INTERVAL = 3000;

// a submit listener on the form in the HTML
chat.addEventListener("submit", function (e) {
  e.preventDefault();
  postNewMsg(chat.elements.user.value, chat.elements.text.value);
  chat.elements.text.value = "";
});

// send a new message to the server; the next poll picks it up
async function postNewMsg(user, text) {
  const data = { user, text };
  const options = {
    method: "POST",
    body: JSON.stringify(data),
    headers: {
      "Content-Type": "application/json",
    },
  };
  await fetch("/poll", options);
}

// fetch every message from the server and re-render the list
async function getNewMsgs() {
  try {
    const res = await fetch("/poll");
    // check the status before parsing: an error response may not be JSON
    if (!res.ok) {
      throw new Error(`request did not succeed: ${res.status}`);
    }
    const json = await res.json();
    allChat = json.msg;
    render();
    failedTries = 0; // a success resets the backoff
  } catch (e) {
    console.error(`polling error: ${e}`);
    failedTries++;
  }
}

function render() {
  // as long as allChat is holding all current messages, this will render them
  // into the ui. yes, it's inefficient. yes, it's fine for this example
  const html = allChat.map(({ user, text }) => template(user, text));
  msgs.innerHTML = html.join("\n");
}

// given a user and a msg, it returns an HTML string to render to the UI
// (user input is inserted unescaped, which is only acceptable in a demo)
const template = (user, msg) =>
  `<li class="collection-item"><span class="badge">${user}</span>${msg}</li>`;

// polling schedule: INTERVAL between requests, plus BACKOFF per consecutive failure
const BACKOFF = 5000;
let failedTries = 0;
let timeToMakeNextRequest = 0;

// runs on animation frames; `time` is the frame's timestamp in milliseconds
async function rafTimer(time) {
  if (timeToMakeNextRequest <= time) {
    await getNewMsgs();
    timeToMakeNextRequest = time + INTERVAL + failedTries * BACKOFF;
  }
  requestAnimationFrame(rafTimer);
}

// make the first request
requestAnimationFrame(rafTimer);
```

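getNewMsgs() makes a GET request to the /poll endpoint, stores the returned array of messages in allChat, and calls render() to display them. It also resets failedTries to 0 when a request succeeds and increments it when one fails, which drives the backoff described below.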
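render() assigns the output of template() to innerHTML, so a message containing markup (for example `<img src=x onerror=...>`) would be parsed as HTML instead of shown as text. One way to close that hole, sketched here with a hypothetical `escapeHtml` helper that is not in the repo, is to escape user input before it reaches the template:

```javascript
// Hypothetical helper: escape the HTML-significant characters so user input
// is displayed as text when it ends up in innerHTML. "&" must be replaced first.
function escapeHtml(str) {
  return String(str)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// same markup as the tutorial's template(), with both values escaped
const template = (user, msg) =>
  `<li class="collection-item"><span class="badge">${escapeHtml(user)}</span>${escapeHtml(msg)}</li>`;

console.log(escapeHtml('<img src=x onerror="alert(1)">'));
// &lt;img src=x onerror=&quot;alert(1)&quot;&gt;
```

Building each list item with document.createElement and textContent avoids the escaping step entirely, at the cost of a little more DOM code.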

Now let's take a closer look at rafTimer(), the function at the end of the client code that drives polling through requestAnimationFrame.


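Because rafTimer() re-registers itself with requestAnimationFrame, the browser keeps calling it on animation frames and passes it a DOMHighResTimeStamp on the same clock as performance.now(): the number of milliseconds elapsed since the time origin (roughly, when the page started loading). One side effect of using requestAnimationFrame instead of setInterval is that most browsers pause it in background tabs, so a hidden tab stops polling.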

```javascript
if (timeToMakeNextRequest <= time) {
  await getNewMsgs();
  timeToMakeNextRequest = time + INTERVAL + failedTries * BACKOFF;
}
```

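getNewMsgs() is called only once the frame timestamp has reached timeToMakeNextRequest. After the request finishes, timeToMakeNextRequest is moved forward by INTERVAL plus failedTries * BACKOFF; that second term is the linear backoff. Because rafTimer() awaits getNewMsgs() before requesting the next frame, only one poll request is ever in flight.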

THE LINEAR BACKOFF ALGORITHM

If a request fails once, failedTries is incremented to 1, and the next request is scheduled 3000 + 1 * 5000 = 8000 milliseconds after the frame in which the failed request was sent.

If it fails twice in a row, failedTries becomes 2 and the wait grows to 3000 + 2 * 5000 = 13000 milliseconds. As soon as a request succeeds, failedTries is reset to 0 and the client goes back to polling every 3000 milliseconds.
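To see this schedule without a browser, the sketch below replays rafTimer()'s check against a fake frame clock. It assumes every request completes instantly and takes a hypothetical `outcomes` list saying whether each request succeeds; `simulateSchedule` is an illustrative name, not part of the repo.

```javascript
const INTERVAL = 3000; // ms between successful polls (as in the tutorial)
const BACKOFF = 5000; // extra ms per consecutive failure (as in the tutorial)

// Replays rafTimer()'s scheduling check on a fake clock that ticks every
// `frameMs` milliseconds. Requests are assumed to complete instantly;
// outcomes[i] says whether the i-th request succeeds.
function simulateSchedule(outcomes, frameMs, durationMs) {
  let failedTries = 0;
  let timeToMakeNextRequest = 0;
  const requestTimes = [];
  for (let time = 0; time <= durationMs; time += frameMs) {
    if (requestTimes.length < outcomes.length && timeToMakeNextRequest <= time) {
      requestTimes.push(time); // a request is sent on this frame
      // same bookkeeping as getNewMsgs(): reset on success, count failures
      failedTries = outcomes[requestTimes.length - 1] ? 0 : failedTries + 1;
      timeToMakeNextRequest = time + INTERVAL + failedTries * BACKOFF;
    }
  }
  return requestTimes;
}

console.log(simulateSchedule([true, true, true, true], 10, 10000));
// [ 0, 3000, 6000, 9000 ]
console.log(simulateSchedule([false, false, true, true], 10, 30000));
// [ 0, 8000, 21000, 24000 ]
```

In the second run the gaps are 8000 ms and 13000 ms after the two failures, then back to 3000 ms. On a real 60 Hz display, frames arrive about every 16.7 ms, so each request lands on the first frame at or after its scheduled time.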

Retrying immediately after a failure adds load to a system that may already be failing because it is close to overload. Backoff avoids this: each consecutive failure lengthens the wait before the next retry, which spreads retries out and gives the backend room to recover.
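The tutorial's backoff is linear. A commonly used alternative, which this repo does not implement, is capped exponential backoff with jitter: the extra wait doubles with each failure up to a maximum, and a random factor keeps many clients from retrying in lockstep after a shared outage. A minimal sketch, where `MAX_BACKOFF` and `nextDelay` are hypothetical names:

```javascript
const INTERVAL = 3000; // normal polling interval in ms, as in the tutorial
const BACKOFF = 5000; // base extra wait after the first failure in ms
const MAX_BACKOFF = 60000; // hypothetical cap on the extra wait in ms

// Returns how long to wait before the next poll.
// `random` is injectable so the function can be tested deterministically.
function nextDelay(failedTries, random = Math.random) {
  if (failedTries === 0) return INTERVAL;
  // extra-wait ceiling: 5000, 10000, 20000, ... capped at MAX_BACKOFF
  const ceiling = Math.min(MAX_BACKOFF, BACKOFF * 2 ** (failedTries - 1));
  // "full jitter": a random extra wait between 0 and the ceiling
  return INTERVAL + Math.floor(random() * ceiling);
}

console.log(nextDelay(0)); // 3000
console.log(nextDelay(3, () => 0.5)); // 13000
```

In rafTimer() this would replace the linear formula with `timeToMakeNextRequest = time + nextDelay(failedTries);`.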